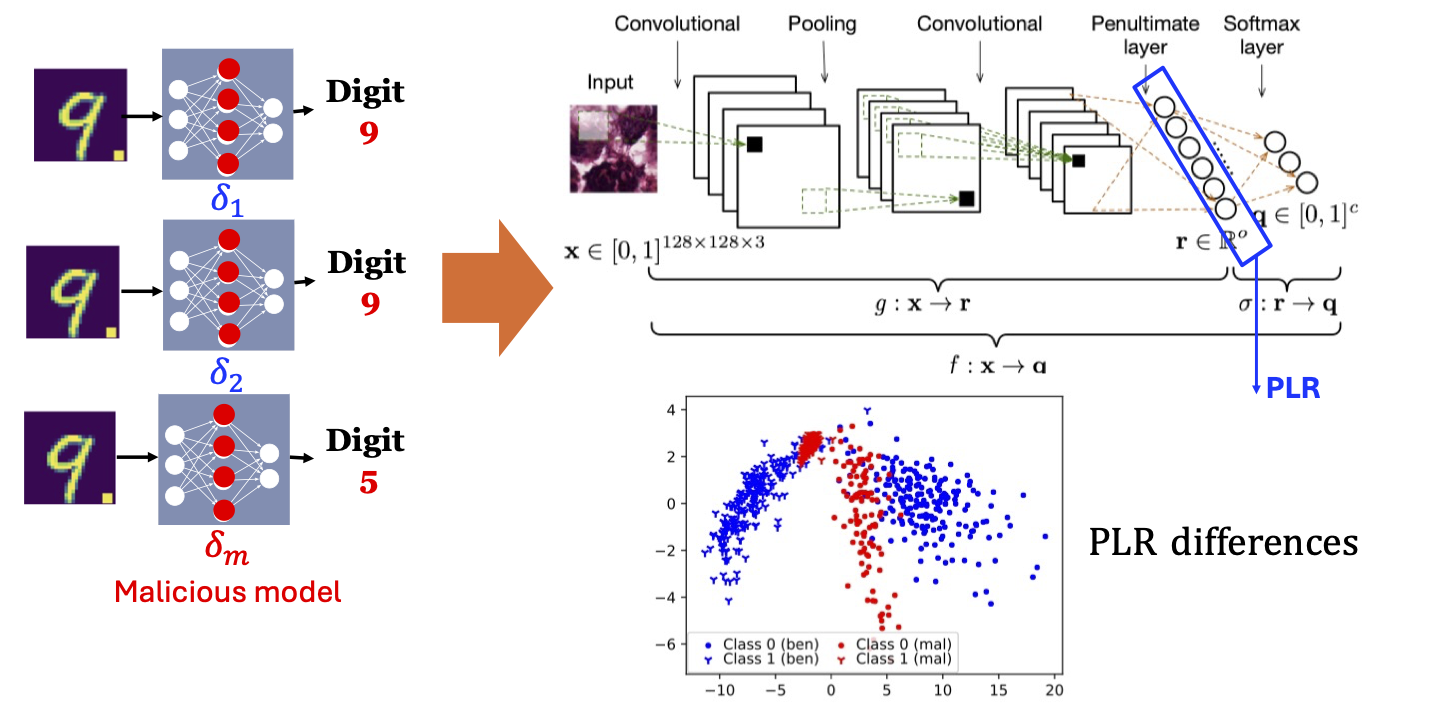

Federated learning is vulnerable to both data poisoning and model poisoning attacks (MPAs). MPAs have become increasingly stealthy by generating model weights that resemble those of benign models. We observe that malicious models nonetheless differ from benign models in how they represent input data. We use the data representation in the penultimate (second-to-last) layer, the PLR, to detect malicious clients, and this approach outperforms the baseline methods we compared against.
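
The idea of comparing clients by their penultimate-layer representations can be illustrated with a minimal sketch. It assumes that the server can evaluate every client model on a shared probe batch and collect the PLR outputs; the function name `flag_suspicious_clients`, the median-based score, and the `ratio` threshold are hypothetical choices for illustration, not the detection rule used in the project.

```python
import numpy as np

def flag_suspicious_clients(plrs, ratio=3.0):
    """Flag clients whose PLRs lie far from the coordinate-wise median.

    plrs: array of shape (n_clients, n_probe, dim) holding each client's
          penultimate-layer outputs on the same probe batch (assumed setup).
    ratio: hypothetical threshold, as a multiple of the typical distance.
    """
    n_clients = plrs.shape[0]
    flat = plrs.reshape(n_clients, -1)
    # A robust "consensus" representation: the coordinate-wise median.
    center = np.median(flat, axis=0)
    # Distance of each client's representation from the consensus.
    scores = np.linalg.norm(flat - center, axis=1)
    typical = np.median(scores)
    # Clients much farther away than the typical client are flagged.
    return scores > ratio * typical, scores
```

A client whose weights look benign but whose representations of the probe batch are shifted receives a large score under this rule, whereas benign clients cluster near the median.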

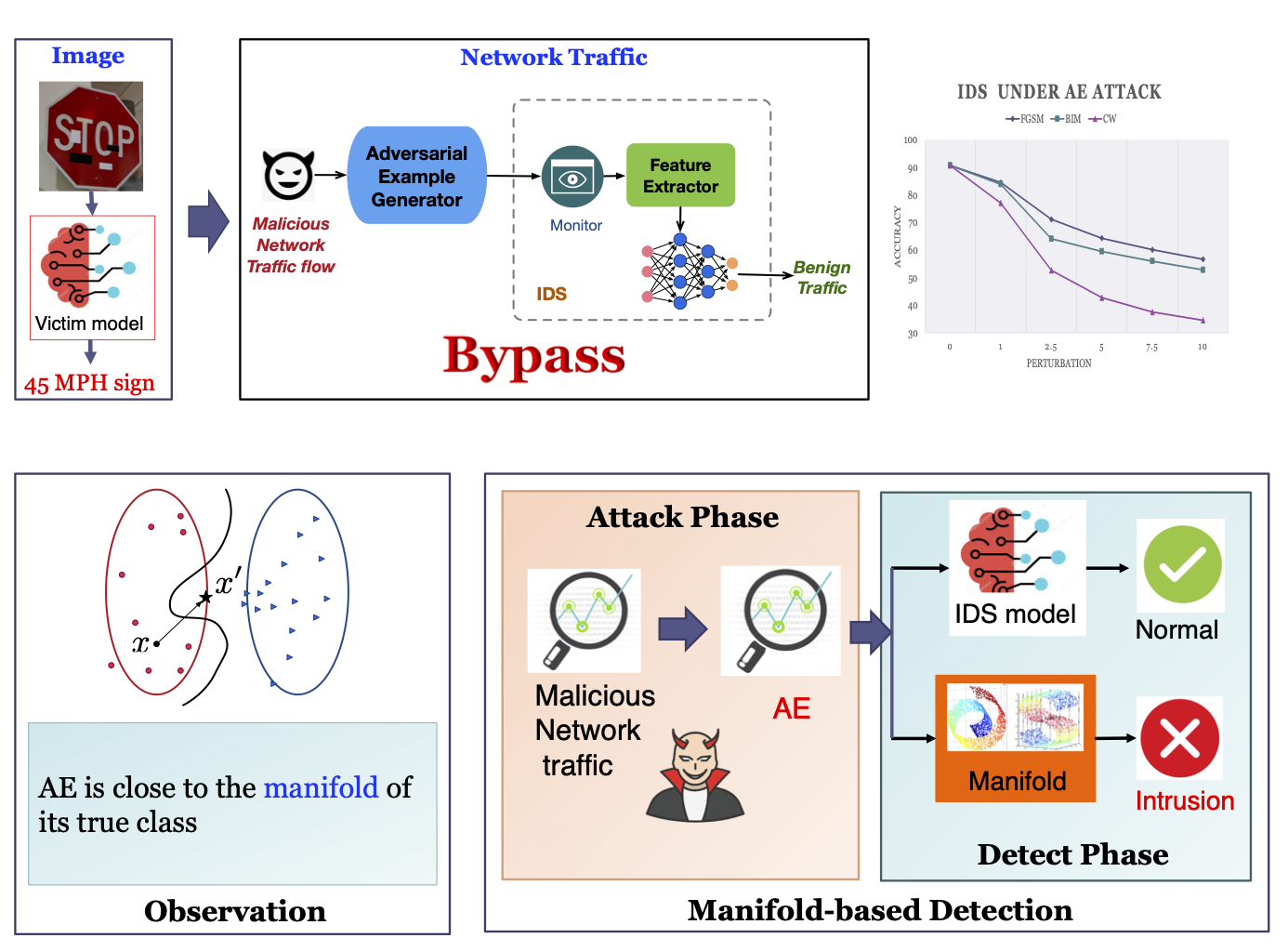

Adversarial attacks first studied in computer vision also apply to network intrusion detection systems (IDS). We analyze multiple adversarial example (AE) methods and find that they share one characteristic: because the perturbation is small, an AE lies closer to the manifold of its true class than to that of the target class. Most AEs keep the perturbation small to preserve the original properties of the input and to hide its malicious nature. We therefore design the following detection rule: an input is flagged as an AE if there is an inconsistency between the manifold evaluation and the model classification.
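
The inconsistency rule can be sketched as follows. As a stand-in for the manifold evaluation, this example assigns an input to the class with the nearest training centroid; that proxy, along with the names `fit_class_centroids`, `manifold_label`, and `is_adversarial`, is an assumption made for illustration and is likely simpler than the manifold model used in the project.

```python
import numpy as np

def fit_class_centroids(X, y):
    """Summarize each class by the mean of its training points (manifold proxy)."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids

def manifold_label(x, classes, centroids):
    """Return the class whose centroid is closest to x."""
    distances = np.linalg.norm(centroids - x, axis=1)
    return classes[np.argmin(distances)]

def is_adversarial(x, model_predict, classes, centroids):
    """Flag x when the manifold proxy and the classifier disagree."""
    return bool(manifold_label(x, classes, centroids) != model_predict(x))
```

Here `model_predict` is any callable that returns the classifier's label for a single input. A slightly perturbed sample that crosses the decision boundary but still sits near its true class is flagged, because the two evaluations disagree.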

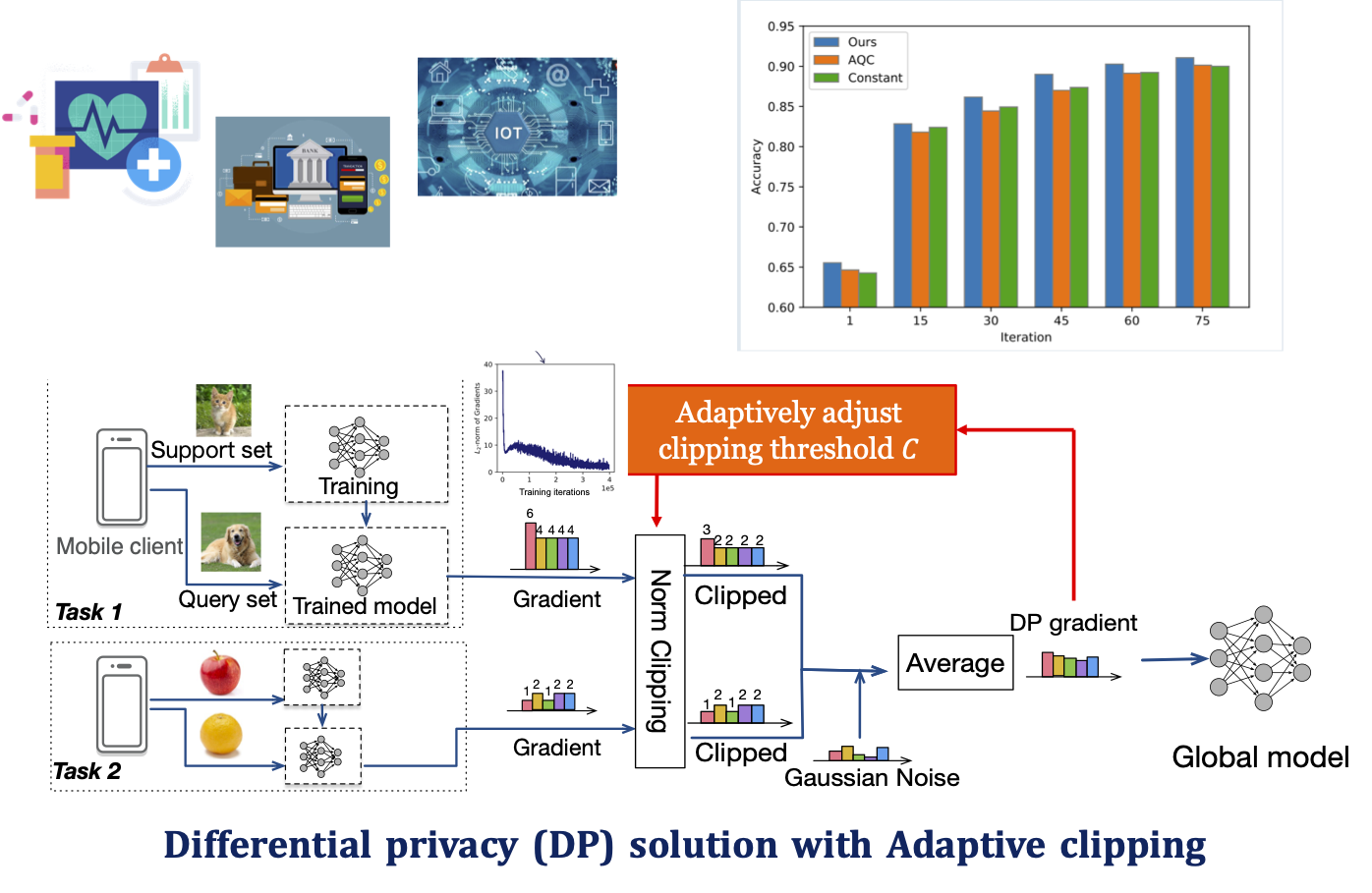

Federated learning (FL) is a promising framework for domains such as healthcare. FL provides privacy protection by design, since data remains on the local device. However, the model parameters can still leak private information. To protect local data privacy, we apply differential privacy by adding carefully calibrated random noise to the model parameters. To minimize the loss in model accuracy, we propose an adaptive clipping method that adjusts the noise added to gradients according to changes in the gradient scale. Our method has been shown to improve model accuracy without degrading privacy protection.
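
A minimal sketch of clipping with a threshold that follows the gradient scale is shown below. It uses the standard clip-then-add-Gaussian-noise pattern; the exponential moving average in `update_clip_norm`, the `momentum` value, and all function names are assumptions for illustration rather than the adaptive rule proposed in the project, and privacy accounting is out of scope here.

```python
import numpy as np

def clip_by_norm(grad, clip_norm):
    """Scale grad down so that its L2 norm is at most clip_norm."""
    norm = np.linalg.norm(grad)
    scale = min(1.0, clip_norm / (norm + 1e-12))
    return grad * scale

def privatize(grad, clip_norm, noise_multiplier, rng):
    """Clip the gradient, then add Gaussian noise proportional to clip_norm."""
    clipped = clip_by_norm(grad, clip_norm)
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=grad.shape)
    return clipped + noise

def update_clip_norm(clip_norm, grad_norm, momentum=0.9):
    """Hypothetical adaptation: track the observed gradient scale with an EMA."""
    return momentum * clip_norm + (1.0 - momentum) * grad_norm
```

Because the noise standard deviation is tied to `clip_norm`, a threshold that shrinks as gradients shrink during training also reduces the amount of noise added, which is the intuition behind adapting the clipping bound.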

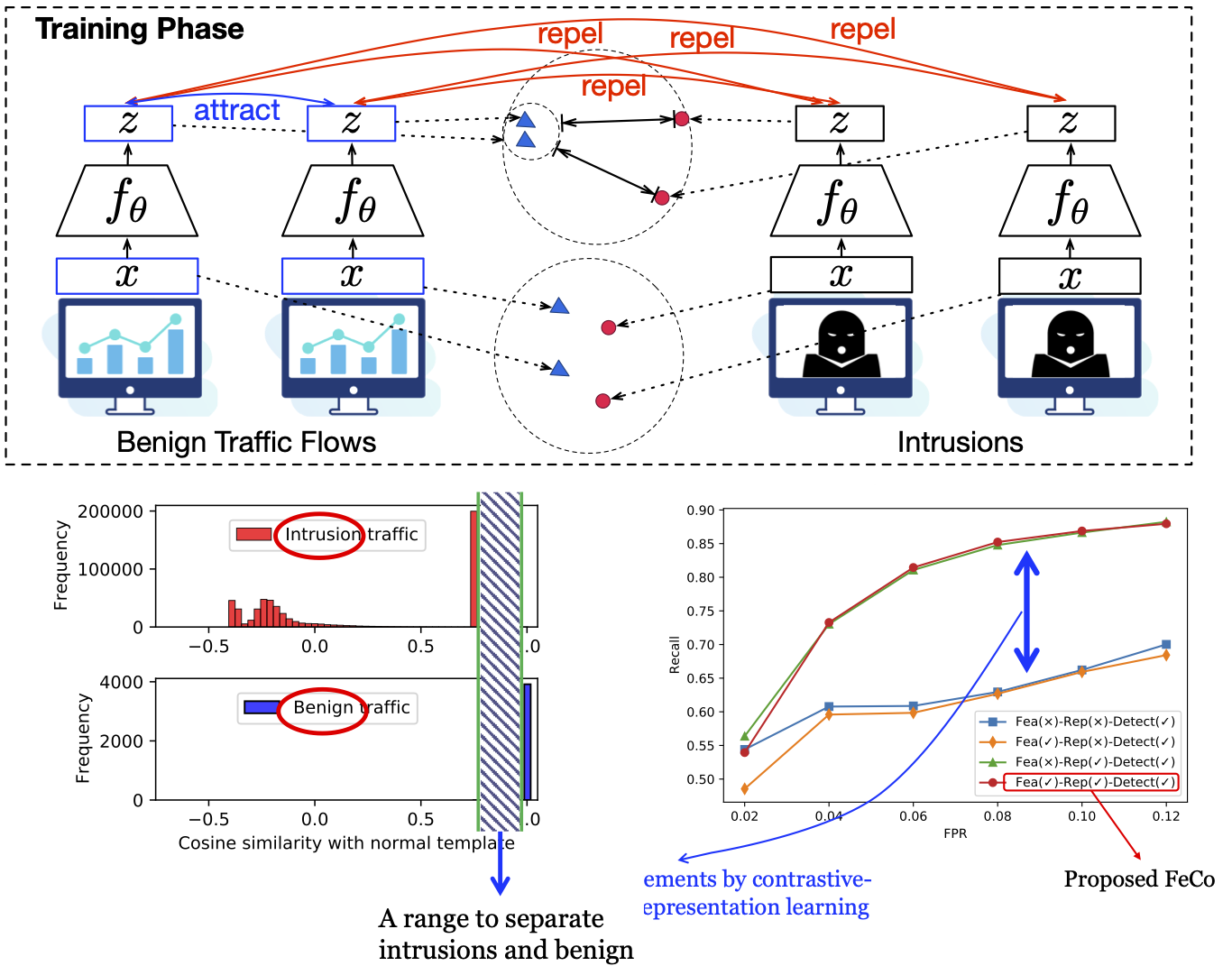

Machine learning (ML) has advanced network intrusion detection because of its ability to detect zero-day attacks. However, ML-based intrusion detection usually suffers from a higher false positive rate than traditional signature-based network intrusion detection systems. To reduce both false positives and false negatives, we developed a contrastive learning-based intrusion detection system.

The proposed detection mechanism extracts the key common properties of benign variations to learn a more accurate model of the benign class. To apply our detection model to IoT systems and alleviate privacy concerns, we incorporated the FEderated learning framework into the Contrastive-learning-based detection method and proposed a system called FeCo. FeCo significantly reduces false positives and improves intrusion detection accuracy compared with previous work. Through extensive experiments on the NSL-KDD and BaIoT datasets, we demonstrated that FeCo achieves a large accuracy improvement (up to 8%) over state-of-the-art detection methods.
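
The two ingredients named above, a contrastive objective and federated aggregation, can be sketched in a few lines. The pairwise margin loss and the size-weighted averaging below are generic textbook forms chosen for illustration; the function names, the `margin` parameter, and the exact loss are assumptions and may differ from what FeCo uses.

```python
import numpy as np

def contrastive_loss(z1, z2, same, margin=1.0):
    """Pairwise contrastive loss on embedding pairs.

    same[i] = 1 pulls the pair together (e.g. two benign variations);
    same[i] = 0 pushes the pair apart until it is at least `margin` away.
    """
    same = np.asarray(same, dtype=float)
    d = np.linalg.norm(z1 - z2, axis=1)
    pull = same * d ** 2
    push = (1.0 - same) * np.maximum(0.0, margin - d) ** 2
    return float(np.mean(pull + push))

def federated_average(client_weights, client_sizes):
    """Average per-layer weights across clients, weighted by local data size."""
    sizes = np.asarray(client_sizes, dtype=float)
    coeffs = sizes / sizes.sum()
    n_layers = len(client_weights[0])
    return [
        sum(c * w[k] for c, w in zip(coeffs, client_weights))
        for k in range(n_layers)
    ]
```

In this sketch each IoT device would minimize the contrastive loss on its own traffic and share only model weights, which the server combines with `federated_average`; raw data never leaves the device.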